☰

|

|

| Developer(s) | Apache Software Foundation |

|---|---|

| Written in | Java |

| Operating system | Cross-platform |

| License | Apache License 2.0 |

| Website | hbase.apache.org |

There are many use cases for which the RDBMS makes perfect sense and for ages RDBMS is the go to solution for data storage related problems. After the advent of big data, companies realized the benefit of processing big data and started opting for solutions like Hadoop. Hadoop uses distributed file system for storing big data, and MapReduce to process it. Hadoop excels in storing and processing of huge data of various formats such as arbitrary, semi-, or even unstructured.

Hadoop can perform only batch processing, and data will be accessed only in a sequential manner. That means one has to search the entire dataset even for the simplest of jobs. A huge dataset when processed results in another huge data set, which should also be processed sequentially.Hence not good for record loopkup, not good for updates, not good for incremental addition of small batches. At this point, a new solution is needed to access any point of data in a single unit of time (random access).

Applications such as HBase, Cassandra, couchDB, Dynamo, and MongoDB are some of the databases that store huge amounts of data and access the data in a random manner.

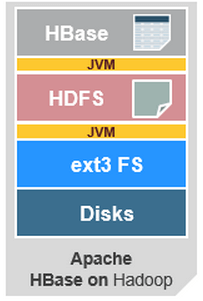

HBase is called the Hadoop database because it is a NoSQL database that runs on top of Hadoop. HBase is a real time, open source, column oriented, distributed database written in Java. HBase is modelled after Google’s BigTable and represents a key value column family store. It combines the scalability of Hadoop by running on the Hadoop Distributed File System (HDFS), with real-time data access as a key/value store and deep analytic capabilities of Map Reduce. It is built on top of Apache Hadoop and Zookeeper.

Apache HBase runs on top of Hadoop as a distributed and scalable big data store. This means that HBase can leverage the distributed processing paradigm of the Hadoop Distributed File System (HDFS) and benefit from Hadoop’s MapReduce programming model. It is meant to host large tables with billions of rows with potentially millions of columns and run across a cluster of commodity hardware. HBase allows you to query for individual records as well as derive aggregate analytic reports across a massive amount of data.

Some of the important features of Apache HBase are:

We will try to understand these features in depth later on.

What is Sharding?

Sharding is a type of database partitioning that separates very large databases into smaller, faster, more easily managed parts called data shards. The word shard means a small part of a whole.

HBase is useful only if:

HBase is not useful if:

| HBase | RDBMS |

|---|---|

| Column oriented | Row-oriented (mostly) |

| Flexible schema, columns can be added on the fly | Fixed schema |

| Designed to store Denormalized data | Designed to store Normalized data |

| Good with sparse tables | Not optimized for sparse tables |

| Joins using MapReduce which is not optimized | Optimized for joins |

| Tight integration with MapReduce | No integration with MapReduce |

| Horizontal scalability – just add hardware | Hard to shard and scale |

| Good for semi-structured data as well as structured data | Good for structured data |

HDFS:

HBase: